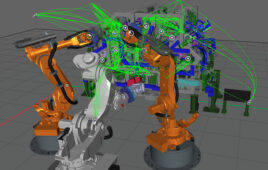

Developers can use Apple Vision Pro to teleoperate robots for capturing demonstration data. Source: NVIDIA

At SIGGRAPH today in Denver, NVIDIA Corp. announced research and offerings for simulation, generative artificial intelligence, and robotics. The company said it is providing a suite of services, models, and computing platforms to enable robotics and AI designers “to develop, train, and build the next generation of humanoid robotics.”

“The next wave of AI is robotics, and one of the most exciting developments is humanoid robots,” stated Jensen Huang, founder and CEO of NVIDIA. “We’re advancing the entire NVIDIA robotics stack, opening access for worldwide humanoid robotics developers and companies to use the platforms, acceleration libraries, and AI models best suited for their needs.”

The company is presenting 20 research papers at SIGGRAPH. Rev Lebaredian, vice president of Omniverse and simulation technology, said in a press briefing that NVIDIA has been working on graphics research since 2001, so its connection to graphics, simulation, and robotics is well-established.

NVIDIA NIMs help develop digital twins

Not only can simulation help design robots and their environments, but it can also be applied to training production systems, said NVIDIA. Its NIM microservices are pre-built containers using NVIDIA inference software, now offered as a service. The company claimed that they can reduce model deployment times from weeks to minutes.

“The time to apply generative AI is now, but it can be daunting,” acknowledged Kari Briski, vice president of generative AI software product management at NVIDIA. “Enterprises need a fast path to production for return on investment. … This led to NVIDIA NIMs to standardize deployment of AI models, and it’s based on CUDA to run out of the box.”

“Two new AI microservices will allow roboticists to enhance simulation workflows for generative physical AI in NVIDIA Isaac Sim, a reference application for robotics simulation built on the NVIDIA Omniverse platform,” the company asserted.

The MimicGen NIM generates synthetic motion data based on recordings of teleoperation using spatial computing devices such as Apple Vision Pro.

The Robocasa NIM generates robot tasks and simulation-ready environments in OpenUSD, the Universal Scene Description framework for developing and collaborating within 3D worlds.

The company also announced NIM microservices to aid in the development of digital twins, as well as USD connectors to allow users to stream massive NVIDIA RTX ray-traced data sets to Apple Vision Pro.

The USD Search NIM for working with OpenUSD can perform text-based searches of 3D assets. Source: NVIDIA

OSMO allows robot orchestration from the cloud

Available now, NVIDIA OSMO is a managed cloud service that robotics developers can use to orchestrate and scale multi-stage workflows across distributed computing resources, whether on premises or in the cloud.

“OSMO vastly simplifies robot training and simulation workflows, cutting deployment and development cycle times from months to under a week,” said NVIDIA. “Users can visualize and manage a range of tasks — like generating synthetic data, training models, conducting reinforcement learning and implementing software-in-the-loop testing at scale for humanoids, autonomous mobile robots [AMRs], and industrial manipulators.”

NVIDIA workflows connect real and synthetic data

Training foundation models for humanoid and other robots typically requires large amounts of data, noted NVIDIA. Teleoperation is one way to capture human demonstration data, but it can be expensive and time-consuming, it said.

NVIDIA announced a workflow that uses AI and Omniverse to enable developers to train robots with smaller amounts of data than previously required. First, developers use Apple Vision Pro to capture a relatively small number of teleoperated demonstrations.

Then, they simulate the recordings in Isaac Sim and use the MimicGen NIM to generate synthetic datasets from the recordings. The developers can train the Project GR00T humanoid foundation model with real and synthetic data, saving time and costs, said NVIDIA.

Roboticists can then use the Robocasa NIM in Isaac Lab, a framework for robot learning, to generate experiences for retraining the robot model. Throughout the workflow, NVIDIA said, OSMO can assign computing jobs to different resources, eliminating weeks of administrative tasks.
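To make the ordering of these stages concrete, the workflow can be read as a dependency graph in which each stage runs once the stages it relies on have finished. The following is a minimal sketch in plain Python, not NVIDIA or OSMO code: the stage names are hypothetical labels paraphrasing the steps described above, and the dependency edges are an assumption about how the stages relate. It uses only the standard library's `graphlib` module to group stages into batches that an orchestrator could, in principle, dispatch to separate computing resources.

```python
from graphlib import TopologicalSorter

# Hypothetical stage names paraphrasing the article's workflow.
# Each key maps to the set of stages that must finish first.
# None of these identifiers come from an NVIDIA or OSMO API.
WORKFLOW = {
    "capture_teleop_demos": set(),
    "replay_in_simulation": {"capture_teleop_demos"},
    "generate_synthetic_data": {"replay_in_simulation"},
    "train_foundation_model": {"capture_teleop_demos", "generate_synthetic_data"},
    "generate_tasks_and_environments": set(),
    "retrain_robot_model": {
        "train_foundation_model",
        "generate_tasks_and_environments",
    },
}


def ready_batches(stages):
    """Group stages into batches whose members do not depend on each other."""
    sorter = TopologicalSorter(stages)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted for a stable order
        batches.append(ready)
        sorter.done(*ready)  # mark the batch finished to unlock successors
    return batches


if __name__ == "__main__":
    for number, batch in enumerate(ready_batches(WORKFLOW), start=1):
        print(f"batch {number}: {', '.join(batch)}")
```

In this sketch, capturing demonstrations and generating tasks and environments land in the same first batch because neither depends on the other, while training waits for both the real and the synthetic data. A real orchestrator would also handle resource selection, retries, and data movement, which are omitted here.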

For instance, Fourier said NVIDIA’s technology will help it generate synthetic data for training its general-purpose platform.

“Developing humanoid robots is extremely complex — requiring an incredible amount of real data, tediously captured from the real world,” said Alex Gu, CEO of Fourier. “NVIDIA’s new simulation and generative AI developer tools will help bootstrap and accelerate our model-development workflows.”

Humanoid firms jump on early access

NVIDIA’s new Humanoid Robot Developer Program offers early access to the NIMs and OSMO, as well as the latest releases of NVIDIA Isaac Sim on Omniverse, Isaac Lab, Jetson Thor compute, and Project GR00T foundation models.

The company said robotics developers including 1x, Boston Dynamics, ByteDance Research, Field AI, Figure, Fourier, Galbot, LimX Dynamics, Mentee, Neura Robotics, RobotEra, and Skild AI have already joined its early-access program.

“Boston Dynamics and NVIDIA have a long history of close collaboration to push the boundaries of what’s possible in robotics,” said Aaron Saunders, chief technology officer of Boston Dynamics. “We’re really excited to see the fruits of this work accelerating the industry at large, and the early-access program is a fantastic way to access best-in-class technology.”

NVIDIA events at SIGGRAPH include a fireside chat between Huang and Lauren Goode, senior writer at Wired, on the impact of robotics and AI in industrial digitalization. Huang will also have a conversation with Meta's Mark Zuckerberg.
