
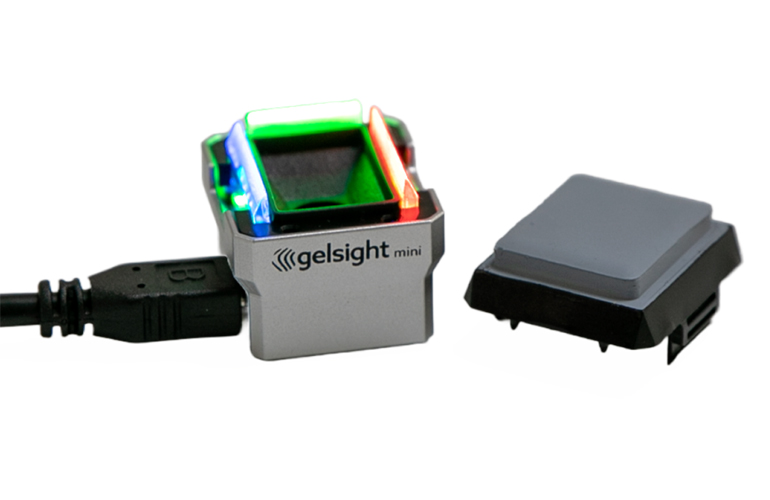

The GelSight Mini, a 3D tactile sensor that gives robots the sense of touch. | Source: GelSight

GelSight announced the release of the GelSight Mini, an artificial intelligence (AI)-powered 3D sensor that can give robots a sense of touch. The sensor is small enough to be comfortable for human hands and strong enough for use in robots and cobots. According to the company, it can produce shareable results within five minutes of being taken out of the box.

“GelSight Mini is a first-of-its-kind, affordable, and compact tactile sensor with an easy, plug-and-play set up that lets users get to work within five minutes of taking the device out of the box,” Dennis Lang, vice president of product at GelSight, said. “We believe that GelSight Mini will reduce the barrier of entry into robotics and touch-based scanning for corporate research and development, academics, and hobbyists, while opening doors to new terrain, such as the Metaverse.”

GelSight set out to make a sensor that would give roboticists more flexibility than any other on the market. The sensor provides digital 2D and 3D mapping at a spatial resolution that exceeds that of human touch. This gives researchers optimized images of material surfaces that can be useful across a broad set of industries.

The GelSight Mini relies on data captured by GelSight’s elastomeric tactile sensing platform, which leverages the Robot Operating System (ROS), PyTouch and Python. This allows users to create detailed and accurate surface characterizations that are directly compatible with familiar industry-standard software environments.

ROS compatibility, frame grabbers and Python scripts are all provided by the company, allowing users to get started right away with unique AI and computer vision tasks, including directly creating digital twins of items to be picked using the sensor.

GelSight Mini can be used in a range of applications, from industrial-style two-finger grippers to bionic hand research and development. The sensor’s compact design and the 3D CAD files of integration adapters that GelSight provides make it easy to install into an existing system.

GelSight is a spinoff from the Massachusetts Institute of Technology (MIT), where its founders developed technologies in the fields of 3D imaging, perceptual modeling and signal processing. The company was founded in 2011.
