The researchers created a robotic hand with tactile sensors in its four fingertips. It can rotate objects in any direction or orientation. | Credit: University of Bristol

Researchers at the University of Bristol said last week that they had made a breakthrough in the development of dexterous robotic hands. The research team, led by Nathan Lepora, professor of robotics and artificial intelligence, explored the limits of inexpensive tactile sensors in grasping and manipulation tasks.

Improving the dexterity of robot hands could have significant implications for automating the handling of goods in supermarkets or the sorting of waste for recycling, the team said.

OpenAI started then stopped its gripper research

OpenAI explored robotic grasping back in 2019; however, the team was disbanded as the company shifted its focus to generative AI. OpenAI recently announced that it is resurrecting its robotics division but hasn't said what the division will work on.

Lepora and his team investigated using inexpensive cellphone cameras, embedded in the gripper's fingertips, to image the tactile interaction between the fingertips and the object in hand.

Other research teams have used proprioception and touch sensing to study in-hand object rotation. However, that work has been limited to rotating an object around its principal axes, or to learning separate policies for arbitrary rotation axes with the hand facing upward.

“In Bristol, our artificial tactile fingertip uses a 3D-printed mesh of pin-like papillae on the underside of the skin, based on copying the internal structure of human skin,” Lepora explained.

Bristol team studies manipulating items under the gripper

Manipulating an object with the hand in different orientations is challenging because the hand has to perform finger-gaiting while keeping the object stable against gravity, Lepora said. However, the resulting policies can be used for only one hand orientation.

Some prior work was able to manipulate objects with the hand facing downward by using a gravity curriculum or precision-grasp manipulation.

In this study, the Bristol team made significant progress in training a unified policy to rotate objects about any given rotation axis with the hand in any orientation. The researchers said they also achieved in-hand manipulation while the hand itself was continuously moving and rotating.

“The first time this worked on a robot hand upside-down was hugely exciting as no one had done this before,” Lepora added. “Initially, the robot would drop the object, but we found the right way to train the hand using tactile data, and it suddenly worked, even when the hand was being waved around on a robotic arm.”

The next steps for this technology are to go beyond pick-and-place and rotation tasks to more advanced forms of dexterity, such as assembling Lego blocks by hand.

The race for real-world applications

This research has direct applications to the emerging and highly visible world of humanoid robotics. In the race to commercialize humanoid robots, new tactile sensors and the intelligence to actively manipulate real-world objects will be key to the form factor’s success.

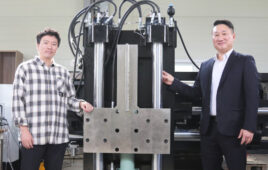

While the Bristol team is doing primary research into new materials and AI training methods for grasping, FingerVision, a Japanese startup, has already commercialized a similar design that pairs a camera-based fingertip with a soft gripper to track tactile contact forces.

FingerVision is deploying its tactile gripper in food-handling applications involving fresh meat, which can be slippery and difficult to grasp. The company demonstrated the technology in North America for the first time at CES 2024 in Las Vegas.
